Docker

Docker is a lightweight, portable Linux container engine. Think of it as a shipping container system for code! It enables any payload and its dependencies to be encapsulated. It’s hardware agnostic¹ and uses OS primitives like LXC to run consistently on virtually any hardware. The same container that a developer builds and tests on a laptop can run at scale, in production, on VMs, OpenStack, bare metal, or IaaS, without modification.

For developers it allows for environment isolation and repeatability, which is great for both testing and development environments.

Imagine onboarding a new engineer into your organization. The process usually involves them spending hours or days setting up their development environment with all the tools, systems, associated dependencies and configuration required for them to do their job. This manual process is time-consuming and prone to error.

Using a Docker container, the environment can be created once and distributed to the new engineer, allowing them to ‘push a button’ and immediately become productive in your engineering and production environment.

Docker is also great for dynamically spinning up instances of your infrastructure. That could be your automated testing and CI environment, QA infrastructure, or deployment and scaling of your production web app, database and backend services. These instances are efficient, consistent and repeatable. Docker eliminates the inconsistencies between development, test and production environments. Because the containers are so lightweight, they can also address significant performance, cost, deployment and portability issues normally associated with VMs.

In Docker parlance an Image is a read-only Layer that never changes. Docker uses a Union File System, so the processes think the whole file system is mounted read-write, but all the changes go to the topmost writable layer while the original files in the read-only image underneath remain unchanged. Each image may depend on one or more images that form the layers beneath it. You create an image by making changes to a container (an instance of an image) and committing it, much like you commit changes to your source code with a VCS like Git or Subversion.
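The layering described above can be sketched with two plain directories. This is only a toy model of the idea, not AUFS itself: the helper functions `union_lookup` and `union_write` and the directory names are hypothetical and exist only for illustration.

```shell
#!/bin/sh
# Toy model of a union file system: each layer is a plain directory.
# Not AUFS; the helper names and layout below are made up for illustration.

# Print the path of FILE in the first layer (top-most first) that contains it.
union_lookup() {
    ul_file=$1
    shift
    for ul_layer in "$@"; do
        if [ -e "$ul_layer/$ul_file" ]; then
            printf '%s\n' "$ul_layer/$ul_file"
            return 0
        fi
    done
    return 1
}

# Writes always land in the top-most (writable) layer; lower layers stay untouched.
union_write() {
    uw_file=$1
    uw_content=$2
    uw_top=$3
    printf '%s\n' "$uw_content" > "$uw_top/$uw_file"
}

demo_root=$(mktemp -d)
image_layer="$demo_root/image"          # stands in for the read-only image
container_layer="$demo_root/container"  # stands in for the writable top layer
mkdir -p "$image_layer" "$container_layer"
printf 'original\n' > "$image_layer/app.conf"

# Before any write, a read resolves to the file in the image layer.
before=$(cat "$(union_lookup app.conf "$container_layer" "$image_layer")")

# The "container" changes the file: the new copy lands in the top layer only.
union_write app.conf 'modified' "$container_layer"
after=$(cat "$(union_lookup app.conf "$container_layer" "$image_layer")")
image_copy=$(cat "$image_layer/app.conf")

echo "before=$before after=$after image=$image_copy"
rm -rf "$demo_root"
```

Reads see the modified file because the top layer is searched first, while the copy in the image layer is never touched.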

Docker containers can change and have state. Container state is either running or exited. When a container is running it includes a tree of processes running on the CPU that are isolated from the other processes running on the host.

To accomplish this isolation Docker uses Linux kernel namespaces (through LXC), with cgroups limiting the resources each container can use.

This brings us to how Docker works. It uses Linux Containers (LXC), cgroups, AUFS, and has a client-server model with a RESTy HTTP API.

LXC lets you run a Linux system within another Linux system. From the inside it looks like a VM and from the outside it looks like a group of normal processes. Containers are fast and have a small footprint. You can boot them in seconds and cram hundreds or thousands on one machine.

With

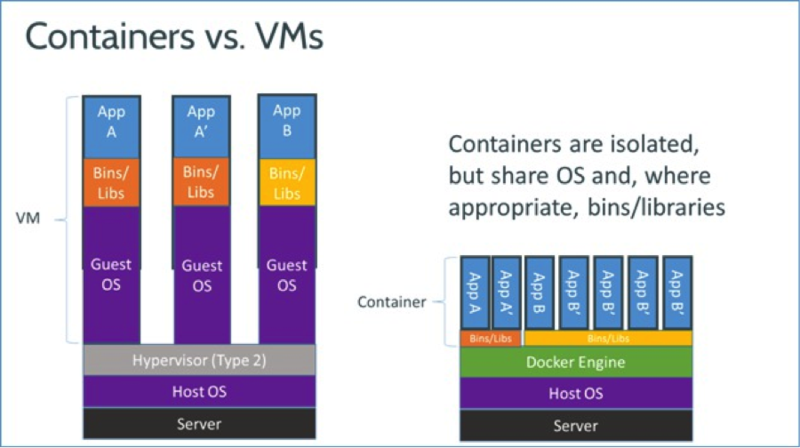

Docker Containers, the efficiencies are even greater. With a traditional VM,

each application, each copy of an application, and each slight modification of

an application require creating an entirely new VM.

As shown above, a new application on a host need only have the application and its binaries/libraries. There is no need for a new guest operating system. If you want to run several copies of the same application on a host, you do not even need to copy the shared binaries. If you make a modification of the application, you need only copy the differences.

Linux cgroups are a kernel feature that Docker leverages to facilitate limiting, accounting and isolating of resource usage such as CPU, disk and memory.
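On a Linux host, the kernel reports which cgroups a process belongs to in `/proc/<pid>/cgroup`, one `hierarchy-ID:controller-list:path` entry per line. As a rough sketch, the hypothetical helper `cgroup_path_for` below pulls out the path for one controller. The sample lines are made up for illustration and are not output captured from a real container.

```shell
#!/bin/sh
# Print the cgroup path for one controller, reading lines in the
# /proc/<pid>/cgroup format ("hierarchy-ID:controller-list:path") from stdin.
# cgroup_path_for is a hypothetical helper, not part of Docker or LXC.
cgroup_path_for() {
    awk -F: -v want="$1" '
        {
            n = split($2, controllers, ",")
            # Everything after the second colon is the path.
            path = $0
            sub(/^[^:]*:[^:]*:/, "", path)
            for (i = 1; i <= n; i++)
                if (controllers[i] == want) { print path; found = 1 }
        }
        END { exit !found }'
}

# Made-up sample in the same format, for illustration only.
sample='4:memory:/lxc/733576a1a96a
3:cpu,cpuacct:/lxc/733576a1a96a
2:devices:/'

printf '%s\n' "$sample" | cgroup_path_for memory
```

Against a live process you would feed it the real file, for example `cgroup_path_for memory < /proc/self/cgroup`. The function exits non-zero when the controller is not listed.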

Docker also leverages AUFS for union mounting of file systems with copy-on-write.

Docker has a vibrant community with 6000+ GitHub stars, 150+ contributors, hundreds of projects and over 1700 ‘Dockerized’ applications on GitHub.

Docker + Glassfish + Oracle JDK

I recently added to the number of Dockerized applications by creating a Docker image containing Oracle JDK 1.7.0_40 and Glassfish 4.0 promoted build 3.

Glassfish is an open source, lightweight, modular, super fast JavaEE application server. It's an Oracle-backed project (originally started by Sun) which has served as the reference implementation of the JavaEE specs since EE5. Java application servers get knocked as being bloated and slow, and Enterprise Java (JavaEE) gets knocked as being hard and cumbersome to develop with. Those knocks might have been true 7 or 8 years ago, but ever since EE5 (released in 2006) and Glassfish they're simply FUD. Glassfish is modular, based on OSGi, and because of this it's fast and lean. It will only start the parts/subsystems required by your application, keeping boot times fast and memory overhead low. Glassfish is also filled with features to make managing it a breeze. It has a fantastic REST-based management API, a full-featured administration web UI, a CLI API, and extensive JMX integration.

To use this and other Docker images you will first need to install Docker. Docker installs natively on Ubuntu Linux but can also run on other Linux distributions, Mac OS X and Windows. Instructions for installing Docker can be found here: http://docs.docker.io/en/latest/installation/

For my environment I have Ubuntu (kernel 3.8.0-29, x64) running in Parallels 9 on a MacBook Pro running OS X 10.8.5.

Once you have Docker installed you can pull down the images I’ve pushed to the public repository.

The first image contains Glassfish 4.0 promoted build 3 and Oracle JDK 1.7.0_40. To get that one run:

$>sudo docker pull noah/glassfish4-p3_jdk7-40

The second image builds off of the first but has additional Glassfish configurations committed to it. Namely:

- The admin password has been set to ‘adminadmin’

- An ‘asadmin login’ has been performed such that the admin credentials have been cached to /root/.gfclient

- enable-secure-admin has been applied to domain1

To get the second image run:

$>sudo docker pull noah/glassfish4

After you have pulled one of these images you can see that you have it locally with:

$>sudo docker images

To instantiate a container from an image use the docker run command. For example, to instantiate a container from the second image run:

$>sudo docker run noah/glassfish4

This will start a container from that image. You can view the running container with:

$>sudo docker ps

ID IMAGE COMMAND CREATED STATUS PORTS

733576a1a96a noah/glassfish4:latest /usr/local/bin/start 19 minutes ago Up 19 minutes 49599->4848, 49600->8080, 49601->8181, 49602->8686, 49603->7676, 49604->3700, 49605->3820, 49606->3920

Notice the output from the docker ps command. It displays the container ID, the image the container was started from, the command running in the container, how long it has been running, its current status and the ports being forwarded.

As I mentioned earlier, Docker containers are instances of an image. So in this case 733576a1a96a is an instance of noah/glassfish4:latest.

When a container runs it executes a command. This command can either be specified as part of the ‘docker run’ command, or be specified when you build the image using a Dockerfile (more on that in a later post). In this case, because we did not specify one, it used the one baked into the image, which is a shell script that configures the host name of the container and then starts Glassfish using asadmin. You can find the Dockerfile used to build this image on GitHub:

https://github.com/noahwhite/docker-glassfish4

Containers by default do not expose ports, and when they do, each exposed port is forwarded from a port on the host to the port inside the container. You can see the list of exposed port forwards in the output of the docker ps command above.

For example, the Glassfish administration GUI port 4848 has been exposed and forwarded from 49599. In order to access the Glassfish HTTP Administration port from outside the container on the localhost you would point your web browser to http://localhost:49599. Docker behaves this way so that you can start multiple containers from the same image, exposing the same ports, on the same system and not run into port conflicts, as each container will have a unique external port number for each one of the exposed ports.
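Because the external port numbers are assigned per container, it can be handy to look them up rather than read them off by eye. The sketch below assumes the `host->container` pair format shown in the PORTS column of the docker ps output above; `host_port_for` is a hypothetical helper, and other Docker versions may print the column differently.

```shell
#!/bin/sh
# Look up the host port that forwards to a given container port, using the
# "host->container" pairs from the PORTS column (format assumed from the
# docker ps output in this post). host_port_for is a hypothetical helper.
host_port_for() {
    hp_ports=$1      # e.g. "49599->4848, 49600->8080"
    hp_container=$2  # e.g. 4848
    printf '%s\n' "$hp_ports" | tr ',' '\n' | tr -d ' ' |
        awk -F'->' -v want="$hp_container" '
            $2 == want { print $1; found = 1 }
            END { exit !found }'
}

# PORTS value copied from the docker ps output earlier in this post.
ports='49599->4848, 49600->8080, 49601->8181, 49602->8686, 49603->7676, 49604->3700, 49605->3820, 49606->3920'

admin_port=$(host_port_for "$ports" 4848)
echo "Glassfish admin console: http://localhost:$admin_port"
```

The function exits non-zero when the container port is not in the list, so a script can tell "not exposed" apart from a successful lookup.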

To stop the container run:

$>sudo docker stop 733576a1a96a

This will stop the running instance of the container and you can verify this by running:

$>sudo docker ps

You will see that there are no running containers.

At this point if you instantiate a container from the same image you will have a new container. Its state will be that of the image, not the state of the previous container we just stopped. This is an important point to understand. If we wanted to preserve the state of the container we just stopped, for example to preserve the log files, deployed applications or embedded database state, we would need to commit the stopped container.

There’s one caveat: as of Docker v0.6.3, if you commit a container that has EXPOSEd ports or ENV variables you will lose those settings and will have to reset them when you run the new image. The Docker folks are working on making this more user friendly; in the meantime there is a trick you can use to work around this. Start by inspecting the stopped container:

$>sudo docker inspect 733576a1a96a

You will get back JSON that looks something like this:

[{
    "ID": "beba9cafde507c6040445c49e15d62f0d997238ed4eeb2c30eb1a789406a1247",
    "Created": "2013-10-07T22:29:52.848910538-04:00",
    "Path": "/usr/local/bin/start-gf.sh",
    "Args": [],
    "Config": {
        "Hostname": "beba9cafde50",
        "User": "",
        "Memory": 0,
        "MemorySwap": 0,
        "CpuShares": 0,
        "AttachStdin": false,
        "AttachStdout": true,
        "AttachStderr": true,
        "PortSpecs": [
            "4848",
            "8080",
            "8181",
            "8686",
            "7676",
            "3700",
            "3820",
            "3920"
        ],
        "Tty": false,
        "OpenStdin": false,
        "StdinOnce": false,
        "Env": [
            "HOME=/",
            "PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/jdk1.7.0_40/bin:/usr/local/glassfish4/bin",
            "JAVA_HOME=/usr/local/jdk1.7.0_40",
            "GF_HOME=/usr/local/glassfish4"
        ],
        "Cmd": [
            "/usr/local/bin/start-gf.sh"
        ],
        "Dns": null,
        "Image": "noah/glassfish4",
        "Volumes": null,
        "VolumesFrom": "",
        "WorkingDir": "",
        "Entrypoint": null,
        "NetworkDisabled": false,
        "Privileged": false
    },
    "State": {
        "Running": false,
        "Pid": 0,
        "ExitCode": 137,
        "StartedAt": "2013-10-07T22:29:52.898170009-04:00",
        "Ghost": false
    },
    "Image": "7516aaab6e0f73514eb938627d23079b2b193aea15917b2b77f21b2cb813659e",
    "NetworkSettings": {
        "IPAddress": "",
        "IPPrefixLen": 0,
        "Gateway": "",
        "Bridge": "",
        "PortMapping": null
    },
    "SysInitPath": "/usr/bin/docker",
    "ResolvConfPath": "/var/lib/docker/containers/beba9cafde507c6040445c49e15d62f0d997238ed4eeb2c30eb1a789406a1247/resolv.conf",
    "Volumes": {},
    "VolumesRW": {}
}]

This is the configuration of the stopped container. When you commit it you can pass this configuration to the commit command and it will be preserved in the new image.

For example:

$>sudo docker commit -run='{
"PortSpecs": ["4848","8080","8181","8686","7676","3700","3820","3920"],
"Env": ["HOME=/",
"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/jdk1.7.0_40/bin:/usr/local/glassfish4/bin",
"JAVA_HOME=/usr/local/jdk1.7.0_40",
"GF_HOME=/usr/local/glassfish4"]}' 733576a1a96a glassfish4_running

That command will commit a new image preserving the exposed ports and ENV variables from the stopped container (ID 733576a1a96a). It will name the new image glassfish4_running and you can start a container from it using that name.
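Typing that JSON by hand is error prone, so one option is to assemble it from plain lists. The following is a minimal sketch: `json_array` is a hypothetical helper that assumes the values contain no double quotes or backslashes (true for the ports and paths used here), and the script only prints the commit command rather than running it.

```shell
#!/bin/sh
# Join arguments into a JSON array of strings. Hypothetical helper; it does
# no escaping, so values must not contain double quotes or backslashes.
json_array() {
    ja_out=''
    for ja_item in "$@"; do
        ja_out="$ja_out${ja_out:+,}\"$ja_item\""
    done
    printf '[%s]' "$ja_out"
}

# Values taken from the inspect output of the stopped container above.
port_specs=$(json_array 4848 8080 8181 8686 7676 3700 3820 3920)
env_vars=$(json_array \
    'HOME=/' \
    'PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/jdk1.7.0_40/bin:/usr/local/glassfish4/bin' \
    'JAVA_HOME=/usr/local/jdk1.7.0_40' \
    'GF_HOME=/usr/local/glassfish4')

run_config=$(printf '{"PortSpecs": %s, "Env": %s}' "$port_specs" "$env_vars")

# Print the command for review instead of executing it.
echo "sudo docker commit -run='$run_config' 733576a1a96a glassfish4_running"
```

Printing the command first lets you check the generated configuration against the inspect output before committing anything.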

Conclusion

Docker is still considered beta and is in heavy development. The project's site states that it is not production ready; however, that hasn’t stopped many organizations like eBay, Cloudflare, and Mailgun from talking about plans for using it in their production environments. Red Hat has just signed a collaboration agreement to bring Docker to RHEL and Fedora. Projects like Deis, Flynn, and CoreOS are building on top of it and there are tons of other cool projects that are already using it.

In future posts I will delve into some of the more advanced configuration and functionality of Docker and further explore how it can be leveraged to enhance the Glassfish container and JavaEE ecosystem.

1. Hardware agnostic in this case means any x86_64 platform which supports Linux. Infrastructure agnostic might be a slightly more precise term. The Docker team is actively working on expanding support beyond x86_64 and there has even been some experimenting around supporting a 'fat' image that simultaneously supports multiple architectures.

Similarly, it is possible to run a VM inside a Docker container. For example QEMU has been run inside a Docker container. QEMU is a generic and open source machine emulator and virtualizer. When used as a machine emulator, QEMU can run OSes and programs made for one machine (e.g. an ARM board) on a different machine (e.g. your own PC). By using dynamic translation, it achieves very good performance.
